Orchestrate Your Platform

This post is a spinoff of how orchestration can save your spaceship, where I explain how orchestration is useful while traveling through space. Here we are going to look at what orchestration is and why you should consider it for your platform.

For a long time we've been deploying software on VMs running in our own datacenter or on compute instances in a cloud provider like AWS, Azure or GCE. This has worked well for a while, but because we have to build the whole deployment infrastructure ourselves and deal with the operational overhead of that setup, costs and risk keep growing over time.

Just as an example, a simple OS update in our cluster requires us to manually bring down the VMs running our app, which reduces its performance while the update is in progress. If we are running in a cloud provider we could treat our VMs as disposable compute and delete and recreate them for every update, at the added monetary cost of running all of them as on-demand VMs. This is where an orchestration framework can help us solve these issues, so let's talk about what it is and the advantages it gives us.

What is orchestration?

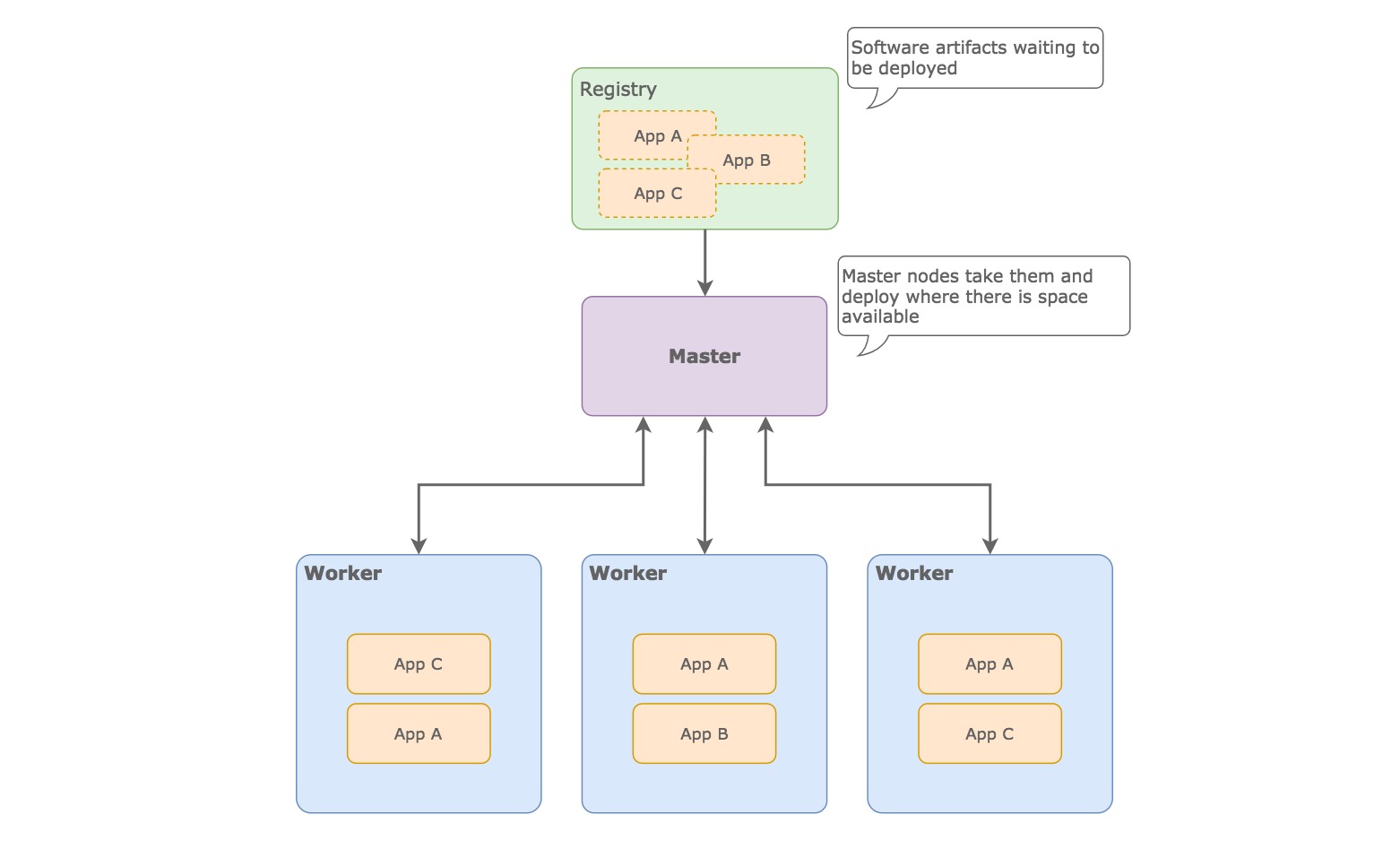

In practice, orchestration is the automation of the deployment and monitoring of a platform. To achieve this, orchestration frameworks use containers as the artifact that gets deployed to the compute nodes. Your application is packaged in a container that can run on any node, so the orchestrator only has to decide where to place it.
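To make the placement decision concrete, here is a minimal sketch of a scheduler that picks a node for a container. The `Node` class, the `place` function and the "most free CPU wins" rule are assumptions made for illustration; real orchestrators use richer and different placement strategies.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    cpu_free: float  # free CPU cores on this node (hypothetical unit)
    mem_free: int    # free memory in MiB
    containers: list = field(default_factory=list)


def place(container: str, cpu: float, mem: int, nodes: list) -> Node:
    """Pick the node with the most free CPU that can still fit the container."""
    candidates = [n for n in nodes if n.cpu_free >= cpu and n.mem_free >= mem]
    if not candidates:
        raise RuntimeError(f"no node can fit {container}")
    target = max(candidates, key=lambda n: n.cpu_free)
    # Reserve the resources on the chosen node.
    target.cpu_free -= cpu
    target.mem_free -= mem
    target.containers.append(container)
    return target


nodes = [
    Node("node-a", cpu_free=1.0, mem_free=2048),
    Node("node-b", cpu_free=4.0, mem_free=8192),
]
chosen = place("frontend", cpu=2.0, mem=1024, nodes=nodes)
print(chosen.name)  # node-b
```

Because the container is self-contained, the scheduler only needs to reason about free resources, not about what is installed on each node.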

The way they work is slightly different in every framework, but normally they are composed of master and worker nodes. The master nodes monitor the state of the workers, and the workers are the ones that run our applications.
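One common way for a master to monitor workers is through periodic heartbeats. The sketch below assumes that approach; the `Master` class, its method names and the 10 second timeout are hypothetical and not taken from any specific framework.

```python
import time
from typing import Optional


class Master:
    """Tracks the last heartbeat of each worker (illustrative only)."""

    def __init__(self, timeout: float = 10.0):
        self.timeout = timeout  # seconds without a heartbeat before a worker is suspect
        self.last_seen = {}

    def heartbeat(self, worker: str, now: Optional[float] = None) -> None:
        # Workers call this periodically to report that they are alive.
        self.last_seen[worker] = time.monotonic() if now is None else now

    def unhealthy(self, now: Optional[float] = None) -> list:
        # Any worker that has been silent for longer than the timeout is flagged.
        now = time.monotonic() if now is None else now
        return sorted(w for w, t in self.last_seen.items() if now - t > self.timeout)


master = Master(timeout=10.0)
master.heartbeat("worker-1", now=0.0)
master.heartbeat("worker-2", now=8.0)
print(master.unhealthy(now=15.0))  # ['worker-1']
```

Once a worker is flagged, the master can reschedule its containers onto healthy nodes.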

Advantages

The main advantage of an orchestration framework is that it reduces the operational cost of running a data center while increasing development speed.

From the operational side, Ops start caring more about the automation of the infrastructure and less about deployments, rollbacks and monitoring. Take as an example the task of adding a new node in an orchestrated environment: the task is just connecting the new node to the cluster. The node registers itself with the orchestration framework and runs its bootstrap process so it can start accepting new containers.

Remember the example about updating the OS? In an orchestrated environment, the node that is going to update its OS alerts the cluster about this action and flushes its containers to other nodes. Then it updates itself and starts accepting containers again, all without human intervention.
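The flush step can be sketched as a small drain function. This is an assumption-laden illustration: the cluster is modelled as a plain dictionary and containers are moved to whichever remaining node currently runs the fewest, which is only one of many possible policies.

```python
def drain(node: str, cluster: dict) -> dict:
    """Move every container off `node` onto the least-loaded remaining nodes."""
    others = [n for n in cluster if n != node]
    if not others:
        raise RuntimeError("no other node available to take the containers")
    moved = {}
    for container in list(cluster[node]):
        # Pick the node currently running the fewest containers.
        target = min(others, key=lambda n: len(cluster[n]))
        cluster[target].append(container)
        cluster[node].remove(container)
        moved[container] = target
    return moved


cluster = {"node-a": ["web-1", "web-2"], "node-b": ["api-1"], "node-c": []}
moved = drain("node-a", cluster)
print(moved)              # {'web-1': 'node-c', 'web-2': 'node-b'}
print(cluster["node-a"])  # []
```

After the drain, `node-a` is empty and can be updated and rebooted without affecting the running applications.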

From the development side, Dev teams can be decoupled from each other, which makes them more autonomous and therefore faster. The environment where developers build their apps is also much closer to production, which makes the development process more reliable: fewer production surprises, less support and more features. If we take as an example the deployment of a new app version, it is a matter of letting the orchestrator know about the new version. It will fetch that artifact and handle the deployment, and the rollback if necessary.
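A deployment with automatic rollback could look roughly like the sketch below. The `rolling_update` function and the `healthy` callback are hypothetical names; the one-replica-at-a-time strategy is an assumption, and each framework implements its own rollout logic.

```python
from typing import Callable


def rolling_update(replicas: list, new_version: str,
                   healthy: Callable[[str], bool]) -> list:
    """Replace replicas one at a time; restore the old versions if a check fails."""
    previous = list(replicas)
    for i in range(len(replicas)):
        replicas[i] = new_version
        if not healthy(new_version):
            # Health check failed: roll every replica back to what ran before.
            replicas[:] = previous
            break
    return replicas


ok = rolling_update(["v1", "v1", "v1"], "v2", healthy=lambda version: True)
failed = rolling_update(["v1", "v1", "v1"], "v2", healthy=lambda version: False)
print(ok)      # ['v2', 'v2', 'v2']
print(failed)  # ['v1', 'v1', 'v1']
```

The developer only declares the target version; the update loop and the rollback decision live in the orchestrator.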

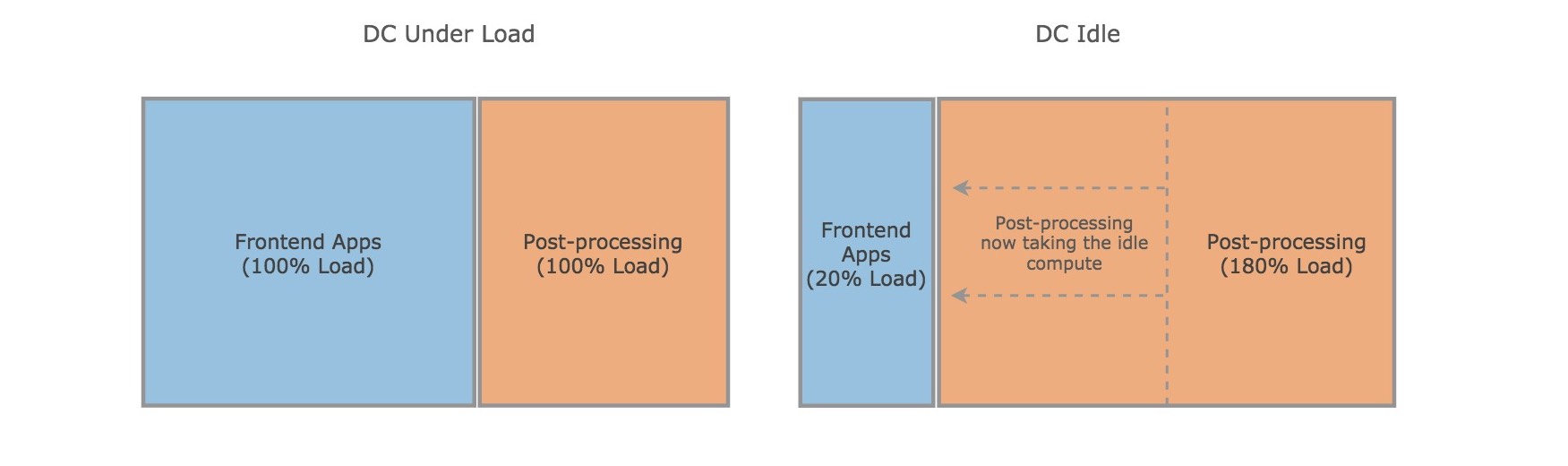

If you are running your own physical data center, orchestration can turn your DC into an elastic cluster, expanding and shrinking sections of it depending on the load of each app. It can also help you run a hybrid setup, with some containers in your physical DC and others in a cloud provider, so if you are running out of space too quickly you can offload that demand to the cloud. This way you get the advantages of both worlds: elasticity, unlimited capacity and lower operational cost, plus speed of development.

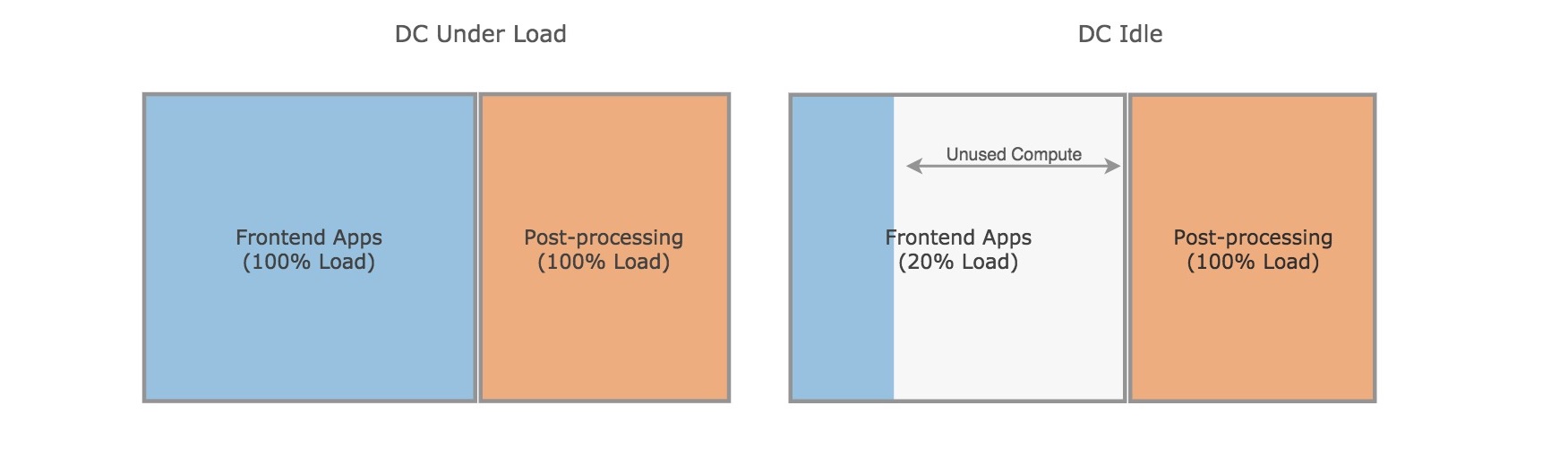

If we take as an example a setup with a frontend application and a post-processing application, this is what a normal DC looks like:

We can see there is some idle compute when the DC is not under high load. If we apply orchestration, we can consume that compute in a better way:

Here, the space that wasn't consumed before has been given to the post-processing jobs, which makes better use of your compute.
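The idea of handing idle capacity to post-processing jobs can be expressed as simple arithmetic. The capacity and load numbers below are made up for illustration, and `backfill` is a hypothetical helper, not part of any orchestrator.

```python
def backfill(capacity: int, frontend_load: list) -> list:
    """Return the compute units left over for batch jobs in each time slot."""
    return [max(capacity - used, 0) for used in frontend_load]


# Hypothetical frontend usage (in compute units) over four time slots.
frontend_load = [20, 35, 90, 60]
batch_share = backfill(100, frontend_load)
print(batch_share)  # [80, 65, 10, 40]
```

The frontend keeps priority in every slot, and the post-processing jobs only receive whatever is left over.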

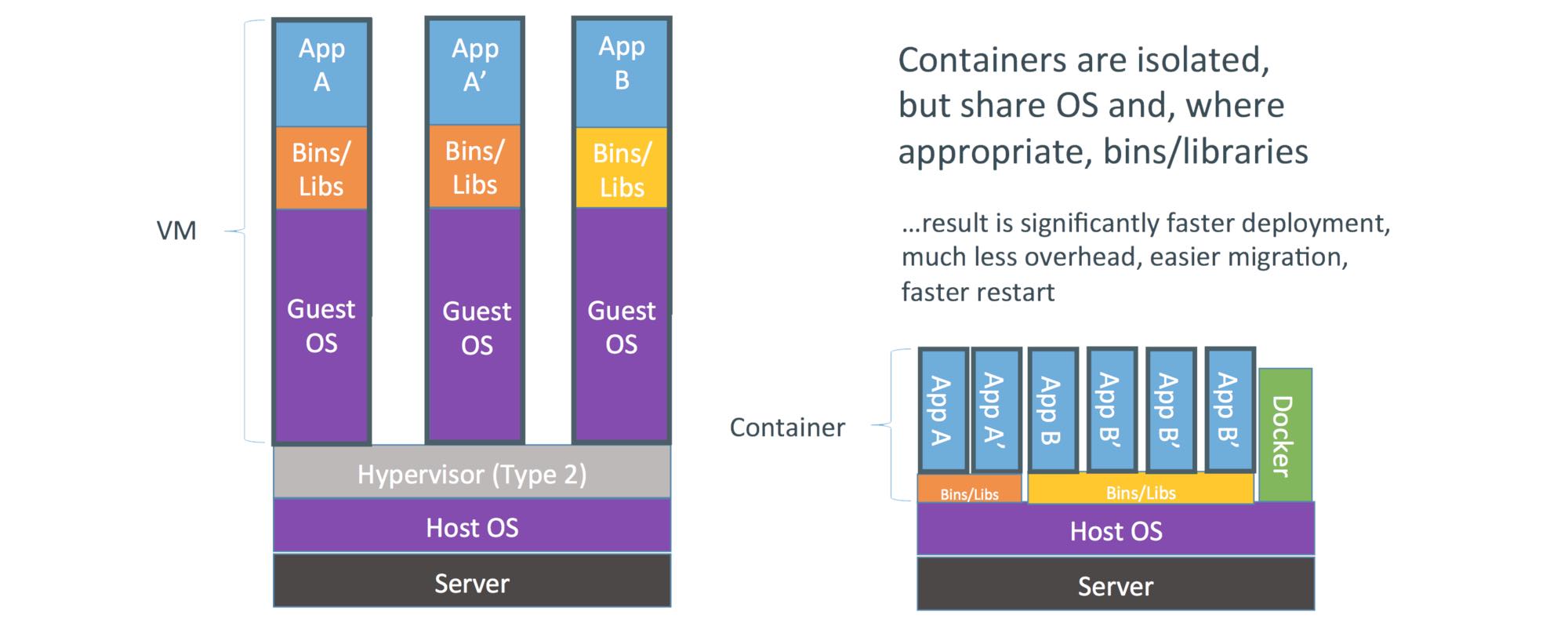

Besides making your data center elastic, orchestration can remove the overhead of running a separate kernel in each of your VMs. This is thanks to the container infrastructure: a container shares the kernel of its host instead of starting up a heavy one of its own, and only carries the libraries needed to run your software.

As you can see in the diagram, we are removing the Guest OS, which consumes a big part of the resources, so the containers run closer to the Host OS.

At the time of this writing there are three orchestration frameworks leading the industry, each with its own advantages:

- Docker Swarm is very minimalist and can run on very limited hardware. You can find Docker Swarm projects running on top of Raspberry Pis.

- Mesos is the old dog in the group and supports multiple frameworks on top of it, such as Marathon, Chronos, Hadoop or Spark, among others.

- Kubernetes is the new big guy. It is backed by Google and its development is insanely fast-paced.

The advantage of using one of these orchestration frameworks is that you can tailor it to your needs. You get the same benefits as hosted platforms like Heroku, AWS Elastic Beanstalk or Google App Engine, but without having to adapt to their restrictions.

And here we are only scratching the surface by looking at how orchestration can save resources and make your platform elastic. We haven't talked about other cool features like auto-scaling patterns, service discovery, SDS or monitoring, which are more good reasons why orchestrated environments are the way to go. Since they differ from solution to solution, it's better to leave them for another post.

I hope you found this post useful and that you now have a better understanding of how orchestration can improve your platform.