Kubernetes Ingress - Simplified

If you want to expose a service running inside Kubernetes, there are a couple of ways to do it; a very handy one is an Ingress. In this post we explain ingresses, ingress controllers, ingress definitions, and how they interact.

We assume a basic understanding of Kubernetes and familiarity with things like pods and services. To keep the explanation quick, we will compare each concept with the more traditional way of exposing websites to the internet using Apache, NGINX, or an API gateway.

Let's start.

Ingress

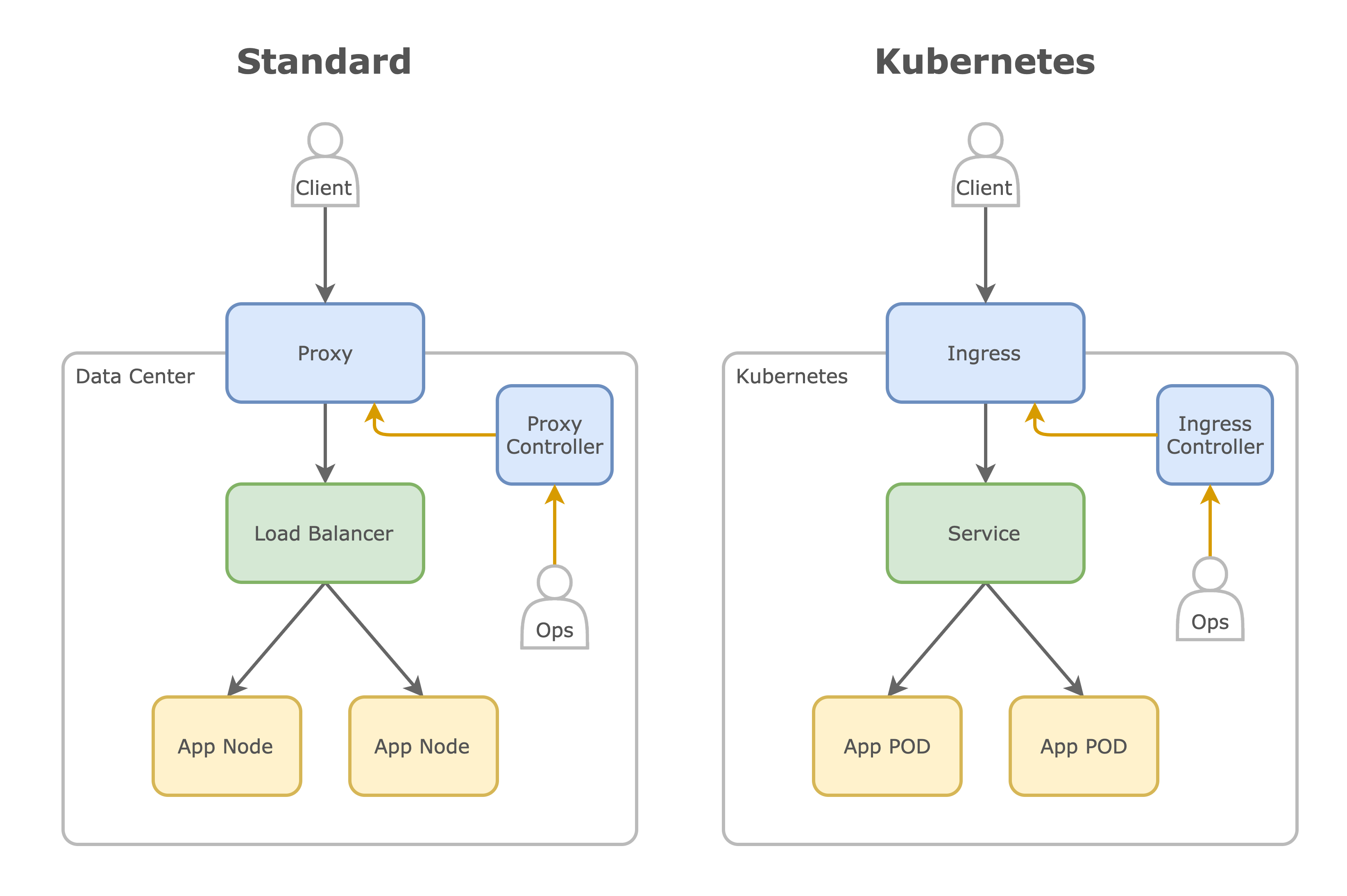

Think of an ingress as the typical reverse proxy in a standard web deployment (NGINX, HAProxy, Apache, Kong, etc.) that sits in front of our app running behind the firewall. The proxy is where we configure rules such as "/account/.* goes to the account service" or "/address/.* goes to the address service", and so on.
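To make the reverse-proxy comparison concrete, here is a toy sketch of the routing table such a proxy holds, written as a Bash function rather than real NGINX or Kong configuration. The upstream names and ports (account:8080, address:8080) are hypothetical.

```shell
# Toy reverse-proxy routing table: maps a request path to an upstream.
# Upstream names and ports are hypothetical, for illustration only.
route() {
  local path="$1"
  case "$path" in
    /account/*) echo "account:8080" ;;   # like /account/.* in the proxy config
    /address/*) echo "address:8080" ;;   # like /address/.*
    *)          echo "404" ;;            # no rule matched
  esac
}

route /account/42      # prints account:8080
route /address/home    # prints address:8080
route /unknown         # prints 404
```

Rules are checked top to bottom and the first match wins, which is also how many proxies resolve overlapping prefixes.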

Ingress Controller

Imagine that one of those standard deployments had a component with an API: we call it with some configuration, it validates the config, and it updates the reverse proxy for us, so we don't have to log into the server and edit config files by hand. In Kubernetes-land, this component is the ingress controller.
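The controller's job boils down to "validate, then apply". The sketch below models that loop in Bash under loud assumptions: a rule is a hypothetical `path=service:port` string, and "applying" just appends to an in-memory array standing in for the proxy configuration. The real Kong Ingress Controller works against the Kubernetes API and Kong instead.

```shell
# Toy "proxy controller": validates a rule, then applies it to the proxy config.
# Rules use a hypothetical "path=service:port" format; PROXY_CONFIG stands in
# for the reverse proxy's configuration.
PROXY_CONFIG=()

validate_rule() {
  local rule="$1"
  # path must start with "/", service must be non-empty, port must be numeric
  [[ "$rule" =~ ^/[^=]*=[a-z0-9-]+:[0-9]+$ ]]
}

apply_rule() {
  local rule="$1"
  if validate_rule "$rule"; then
    PROXY_CONFIG+=("$rule")            # "update the reverse proxy"
    echo "applied: $rule"
  else
    echo "rejected: $rule" >&2
    return 1
  fi
}

apply_rule "/foo=echo:80"              # applied
apply_rule "foo=echo:eighty" || true   # rejected: no leading "/", non-numeric port
echo "${#PROXY_CONFIG[@]} rule(s) in proxy config"
```

Rejecting bad input before touching the proxy is the point: a typo never reaches the running configuration.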

Ingress Definition

To call that API, you need a resource definition that specifies things like path, host, and port, so the controller can update the reverse proxy accordingly. In Kubernetes-land this is called an ingress definition. Here's an example:

```yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: demo
spec:
  rules:
  - http:
      paths:
      - path: /foo
        backend:
          serviceName: echo
          servicePort: 80
```

This ingress definition says: when a request arrives on the path /foo, send it to the service echo on port 80. It is very simple; we will extend it a bit later in this post.
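A note on API versions: `extensions/v1beta1` works on the Kubernetes 1.16 cluster used in this post, but that Ingress API was removed in Kubernetes 1.22. On newer clusters the same rule is written with `networking.k8s.io/v1`, where `pathType` is required and the backend moves under `service.name` and `service.port.number`. The hypothetical `ingress_v1` helper below only prints such a manifest; `pathType: Prefix` is our choice, and depending on the controller version you may also need to set `spec.ingressClassName`.

```shell
# Hypothetical helper: prints a networking.k8s.io/v1 Ingress equivalent to the
# extensions/v1beta1 example above. Arguments: name, path, service, port.
ingress_v1() {
  local name="$1" path="$2" service="$3" port="$4"
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ${name}
spec:
  rules:
  - http:
      paths:
      - path: ${path}
        pathType: Prefix
        backend:
          service:
            name: ${service}
            port:
              number: ${port}
EOF
}

# Usage against a newer cluster (not needed for the 1.16 demo):
#   ingress_v1 demo /foo echo 80 | kubectl apply -f -
ingress_v1 demo /foo echo 80
```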

All together

Here is a comparison of a standard deployment and a Kubernetes one. They are very similar; the main difference is that in Kubernetes the proxy controller is called the ingress controller.

On the standard side we call our component the Proxy Controller: it validates the config and updates the proxy accordingly. On the Kubernetes side, that role is played by the Ingress Controller. After that, clients can send requests to the proxy (or the ingress), which forwards them to the appropriate load balancer or, in Kubernetes, to a Service.

Well done: now we know everything there is to know about ingresses, and we can show off and brag about it. But what kind of people would we be without a demo? Let's put these 3 concepts together in a small tutorial.

Demo

For this demo we will deploy an ingress to a Kubernetes cluster, create a service, and then configure the ingress to proxy requests to it.

If you don't have a Kubernetes cluster yet, create one with minikube:

```shell
$ minikube start --vm-driver=virtualbox
😄 minikube v1.5.2 on Darwin 10.15.1
🔥 Creating virtualbox VM (CPUs=2, Memory=2000MB, Disk=20000MB) ...
🐳 Preparing Kubernetes v1.16.2 on Docker '18.09.9' ...
💾 Downloading kubeadm v1.16.2
💾 Downloading kubelet v1.16.2
🚜 Pulling images ...
🚀 Launching Kubernetes ...
⌛ Waiting for: apiserver
🏄 Done! kubectl is now configured to use "minikube"

$ kubectl cluster-info
Kubernetes master is running at https://192.168.99.100:8443
KubeDNS is running at https://192.168.99.100:8443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy
```

Keep the Kubernetes Master IP for later.
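On minikube, the simplest way to get this address again later is `minikube ip`. If you'd rather derive it from the output above, the sketch below extracts the host with `sed`. It assumes the plain-text line format shown here; newer kubectl versions print "control plane" instead of "master", which the pattern also accepts.

```shell
# Extracts the host from the "Kubernetes master/control plane is running at
# https://HOST:PORT" line of kubectl cluster-info output (format assumed).
master_ip() {
  sed -nE 's#^Kubernetes (master|control plane) is running at https://([^:/]+):[0-9]+.*#\2#p'
}

echo "Kubernetes master is running at https://192.168.99.100:8443" | master_ip
# prints 192.168.99.100
```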

There are many Ingress implementations; here we will use Kong, since it is not only a reverse proxy but a fully fledged, open-source API gateway. If you haven't played with Kong yet, check out the post Starting with Kong.

As our ingress controller we will use the Kong Ingress Controller.

Their GitHub repo provides a single file that deploys all the necessary components (https://bit.ly/k4k8s). Let's look at what the YAML file contains:

- CustomResourceDefinitions: They define a few CRDs. For those who don't know what these are: the Kubernetes API is extensible, so you could, for example, create a new definition called house.mydomain.com to store houses in Kubernetes and use it later inside the cluster. The folks at Kong Ingress Controller do exactly that, so you can configure Kong beyond a simple reverse proxy and get all the bells and whistles of an API gateway, such as rate limiting, authentication, and logging. These are the CRDs they create:
  - kongconsumers.configuration.konghq.com
  - kongcredentials.configuration.konghq.com
  - kongingresses.configuration.konghq.com
  - kongplugins.configuration.konghq.com
- ServiceAccount: The Kong Ingress Controller needs a service account with certain permissions to query the Kubernetes API and update Kong.
- ClusterRole: The permissions we grant to that service account.
- ClusterRoleBinding: The link between the ServiceAccount and the ClusterRole.
- ConfigMap: The Kong configuration.
- Service (kong-proxy): The external service used to expose Kong to the internet. That's why it has AWS LoadBalancer annotations; since we are not running on AWS, we are going to modify this service.
- Service (kong-validation-webhook): An internal service used to check whether the configuration provided to Kong is valid.
- Deployment: This deployment has the 2 containers we need:
  - proxy: Kong itself, our API gateway
  - ingress-controller: the kong-ingress-controller

Before deploying this file, let's modify the kong-proxy service so it is exposed correctly. I am assuming you are not running in a cloud; if you are on AWS, go ahead and deploy it as it is. Otherwise, assume no LoadBalancer is available and use a NodePort service instead, which exposes the same port on every node.

So, save the file locally so you can modify it:

```shell
$ curl -O https://raw.githubusercontent.com/Kong/kubernetes-ingress-controller/master/deploy/single/all-in-one-dbless.yaml
```
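To compare the component list above with the file you just downloaded, you can count its top-level `kind:` lines. This is a rough sketch, not a YAML parser: it assumes each resource's top-level `kind:` starts at column 0, as in typical manifests, so indented `kind:` keys (such as the names inside a CRD spec) are ignored.

```shell
# Counts top-level resource kinds in a multi-document YAML manifest.
# Rough sketch: matches only unindented "kind:" lines, no real YAML parsing.
count_kinds() {
  grep -E '^kind:' "$1" | awk '{print $2}' | sort | uniq -c
}

# Usage:
#   count_kinds all-in-one-dbless.yaml
```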

Here is how the kong-proxy service should look after the modification:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: kong-proxy
  namespace: kong
spec:
  ports:
  - name: proxy
    port: 80
    targetPort: 8000
    nodePort: 30036
    protocol: TCP
  - name: proxy-ssl
    port: 443
    targetPort: 8443
    nodePort: 30037
    protocol: TCP
  selector:
    app: ingress-kong
  type: NodePort
---
```
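The nodePort values 30036 and 30037 are not arbitrary: by default Kubernetes allocates node ports only from 30000-32767 (configurable with the API server's `--service-node-port-range` flag), and an explicit `nodePort` outside that range is rejected. A quick Bash check, assuming the default range unless you pass other bounds:

```shell
# Checks that a nodePort falls inside the NodePort range (default 30000-32767).
# Optional 2nd/3rd arguments override the bounds for a custom range.
valid_nodeport() {
  local port="$1" min="${2:-30000}" max="${3:-32767}"
  [[ "$port" =~ ^[0-9]+$ ]] && (( port >= min && port <= max ))
}

for p in 30036 30037 8080; do
  if valid_nodeport "$p"; then echo "$p ok"; else echo "$p out of range"; fi
done
```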

After that just deploy the file:

```shell
$ kubectl apply -f all-in-one-dbless.yaml
namespace/kong created
customresourcedefinition.apiextensions.k8s.io/kongconsumers.configuration.konghq.com created
customresourcedefinition.apiextensions.k8s.io/kongcredentials.configuration.konghq.com created
customresourcedefinition.apiextensions.k8s.io/kongingresses.configuration.konghq.com created
customresourcedefinition.apiextensions.k8s.io/kongplugins.configuration.konghq.com created
serviceaccount/kong-serviceaccount created
clusterrole.rbac.authorization.k8s.io/kong-ingress-clusterrole created
clusterrolebinding.rbac.authorization.k8s.io/kong-ingress-clusterrole-nisa-binding created
configmap/kong-server-blocks created
service/kong-proxy created
service/kong-validation-webhook created
deployment.apps/ingress-kong created
```

Let's check that all is in order:

```shell
# Check deployment
$ kubectl get deployments --namespace=kong
NAME           READY   UP-TO-DATE   AVAILABLE   AGE
ingress-kong   1/1     1            1           112s

# Check Custom Resource Definitions (CRDs are cluster-scoped, so --namespace has no effect here)
$ kubectl get crd --namespace=kong
NAME                                       CREATED AT
kongconsumers.configuration.konghq.com     2019-12-08T16:05:01Z
kongcredentials.configuration.konghq.com   2019-12-08T16:05:01Z
kongingresses.configuration.konghq.com     2019-12-08T16:05:01Z
kongplugins.configuration.konghq.com       2019-12-08T16:05:01Z

# Check the services
$ kubectl get services --namespace=kong
NAME                      TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)                      AGE
kong-proxy                NodePort    10.102.30.9     <none>        80:30036/TCP,443:30037/TCP   3m6s
kong-validation-webhook   ClusterIP   10.98.194.253   <none>        443/TCP                      3m6s
```

Let's make a request to the Kubernetes master IP on the node port we configured. (Note: if you are running in a cloud, use the LoadBalancer IP instead of the master IP.)

```shell
$ curl -i 192.168.99.100:30036
HTTP/1.1 404 Not Found
Date: Sun, 08 Dec 2019 22:18:35 GMT
Content-Type: application/json; charset=utf-8
Connection: keep-alive
Content-Length: 48
Server: kong/1.3.0

{"message":"no Route matched with those values"}
```

This is Kong telling us that there is no matching route yet. To give it something to route to, let's deploy a pod with a service:

```shell
$ curl -sL https://gist.githubusercontent.com/r1ckr/340c2ddee3a500d21af7051a7b9a92bb/raw/86aba5f313a48a8c493f641f59371f3c89c794bd/k8s-echoserver.yml | kubectl apply -f -
service/echo created
deployment.apps/echo created
```

Now let's create an ingress definition to forward /foo to our echo service:

```shell
$ echo "
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: demo
spec:
  rules:
  - http:
      paths:
      - path: /foo
        backend:
          serviceName: echo
          servicePort: 80
" | kubectl apply -f -
```

Let's check the logs of our Kong pod:

```shell
$ kubectl logs ingress-kong-68f878bbcc-758vq proxy --namespace=kong
...
2019/12/08 23:19:14 [notice] 24#0: *86187 [lua] cache.lua:321: purge(): [DB cache] purging (local) cache, client: 127.0.0.1, server: kong_admin, request: "POST /config?check_hash=1 HTTP/1.1", host: "localhost:8444"
127.0.0.1 - - [08/Dec/2019:23:19:14 +0000] "POST /config?check_hash=1 HTTP/1.1" 201 3827 "-" "Go-http-client/1.1"
...
```

Here we can see the POST request to the /config path that the ingress controller makes every time we apply a new ingress definition.
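Since the pod name (ingress-kong-68f878bbcc-758vq) changes on every rollout, it can be handier to ask kubectl for the deployment's logs and keep only the controller's config pushes. `kubectl logs deploy/ingress-kong -c proxy -n kong` is standard kubectl; the `config_pushes` filter is a hypothetical helper that assumes the access-log format shown in the excerpt above, where the HTTP status is the 9th whitespace-separated field.

```shell
# Keeps only access-log lines for POST /config requests and prints the HTTP
# status of each. Assumes the access-log format shown in the excerpt above.
config_pushes() {
  awk '$6 == "\"POST" && $7 ~ /^\/config/ { print $9 }'
}

# Usage:
#   kubectl logs deploy/ingress-kong -c proxy -n kong | config_pushes
sample='127.0.0.1 - - [08/Dec/2019:23:19:14 +0000] "POST /config?check_hash=1 HTTP/1.1" 201 3827 "-" "Go-http-client/1.1"'
echo "$sample" | config_pushes   # prints 201
```

Matching on the request fields rather than a plain `grep "POST /config"` keeps the `[notice]` cache line, which also mentions the request, out of the result.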

This time, if we make a request to our master node on the /foo path, Kong successfully proxies it to our echo service:

```shell
$ curl -i 192.168.99.100:30036/foo
HTTP/1.1 200 OK
Content-Type: text/plain; charset=UTF-8
Transfer-Encoding: chunked
Connection: keep-alive
Date: Sun, 08 Dec 2019 22:33:07 GMT
Server: echoserver
X-Kong-Upstream-Latency: 0
X-Kong-Proxy-Latency: 2
Via: kong/1.3.0

Hostname: echo-85fb7989cc-ptjzl
...
```

That's it: we've created a route in our Ingress (Kong), and now we can call our services from outside the cluster. Remember those Custom Resource Definitions? Check out the Kong Ingress Controller to see how to install different Kong plugins.

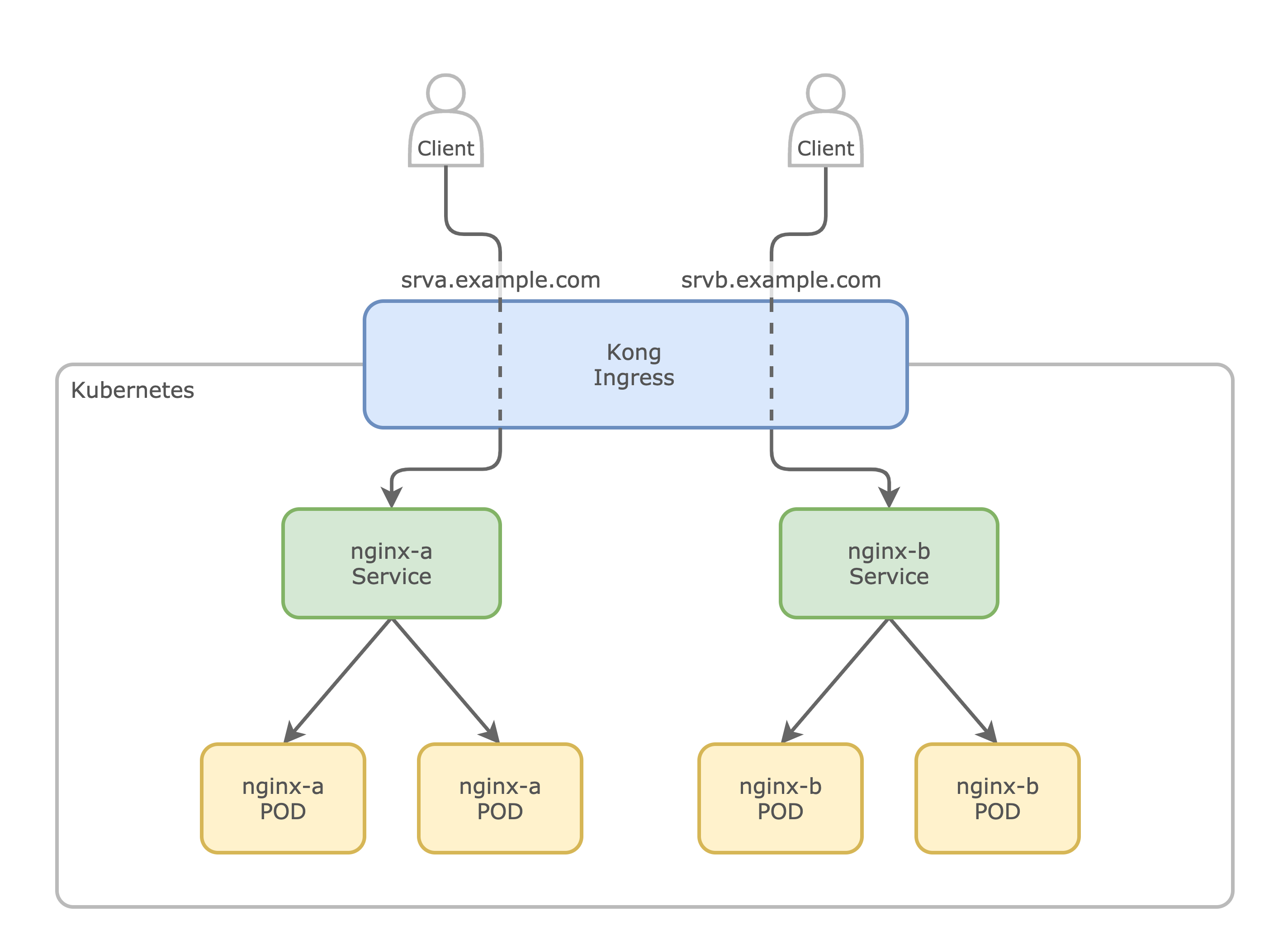

Bonus track: DNS subdomains ingresses

As an extra, let's see how we can route different domains to different services with the same Ingress. To do that, we would create 2 DNS A records pointing to the same Ingress IP, then configure the ingress to route requests based on the host. Here's what we are going to do:

Let's start by deploying 2 pods with their services inside our Kubernetes cluster:

```shell
# Service A
curl -sL https://gist.githubusercontent.com/r1ckr/e57808fe73a5b3e26d6cedfed4562af0/raw/dd432168970d2ce39b59776fa2f82007f584a2a3/k8s-nginx-a.yml | kubectl apply -f -

# Service B
curl -sL https://gist.githubusercontent.com/r1ckr/e4590c269774956f5907dc1af7e289c4/raw/d057e47f9b8dd5b7ef0155ee7f5f955529d70e36/k8s-nginx-b.yml | kubectl apply -f -
```

Now let's create 2 ingress definitions to expose those services depending on the domain:

```shell
# Service A
$ echo "
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: service-a
spec:
  rules:
  - host: srva.example.com
    http:
      paths:
      - path: /foo
        backend:
          serviceName: nginx-a
          servicePort: 80
" | kubectl apply -f -
ingress.extensions/service-a created

# Service B
$ echo "
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: service-b
spec:
  rules:
  - host: srvb.example.com
    http:
      paths:
      - path: /foo
        backend:
          serviceName: nginx-b
          servicePort: 80
" | kubectl apply -f -
ingress.extensions/service-b created
```

Pay attention to the new host attribute: it instructs the Ingress to forward a request only if its Host header matches that value.

Let's call the Master IP with the srva.example.com domain:

```shell
$ curl -H "Host: srva.example.com" 192.168.99.100:30036/foo
{"message": "This is NGINX A"}
```

Now, let's call with the srvb.example.com domain and see the response from the other service:

```shell
$ curl -H "Host: srvb.example.com" 192.168.99.100:30036/foo
{"message": "This is NGINX B"}
```
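If you don't want to create real DNS records for a local demo, a common workaround is to map the hostnames to the node IP in /etc/hosts. The hypothetical helper below only prints the entries, so you can review them before appending them yourself (for example with `sudo tee -a /etc/hosts`). /etc/hosts has no wildcard support, so every subdomain needs its own line, and requests still have to target node port 30036; setting the Host header explicitly, as above, avoids depending on how a proxy treats a port inside the Host header.

```shell
# Prints /etc/hosts lines mapping each hostname to the given IP.
# Hypothetical helper for local testing; it does not modify any file.
hosts_entries() {
  local ip="$1"; shift
  local host
  for host in "$@"; do
    printf '%s\t%s\n' "$ip" "$host"
  done
}

hosts_entries 192.168.99.100 srva.example.com srvb.example.com
# To apply (requires root): hosts_entries ... | sudo tee -a /etc/hosts
```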

You can use wildcards too, in case you want dynamic subdomains, e.g.:

```shell
$ echo "
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: wildcard-domain
spec:
  rules:
  - host: '*.demos.example.com'
    http:
      paths:
      - path: /foo
        backend:
          serviceName: nginx-b
          servicePort: 80
" | kubectl apply -f -
ingress.extensions/wildcard-domain created
```

Here we tell the Ingress to send any subdomain of demos.example.com to the nginx-b service. Let's test that:

```shell
$ curl -H "Host: monday.demos.example.com" 192.168.99.100:30036/foo
{"message": "This is NGINX B"}

$ curl -H "Host: tuesday.demos.example.com" 192.168.99.100:30036/foo
{"message": "This is NGINX B"}
```
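It helps to know how the Kubernetes documentation defines Ingress host matching: an exact host must equal the Host header, and a wildcard such as `*.demos.example.com` covers exactly one extra DNS label. So `monday.demos.example.com` matches, while `demos.example.com` and `a.monday.demos.example.com` do not. The Bash function below is a sketch of those rules only; individual controllers, Kong included, may treat wildcards slightly differently.

```shell
# Sketch of Ingress host matching as described in the Kubernetes docs:
# exact hosts match exactly; "*.suffix" matches exactly one extra DNS label.
# Comparison is case-sensitive for simplicity.
host_matches() {
  local rule="$1" host="$2"
  if [[ "$rule" == '*.'* ]]; then
    local suffix="${rule:1}"                # "*.demos.example.com" -> ".demos.example.com"
    [[ "$host" == *"$suffix" ]] || return 1
    local label="${host%"$suffix"}"         # the part before the suffix
    [[ -n "$label" && "$label" != *.* ]]    # exactly one non-empty label
  else
    [[ "$host" == "$rule" ]]
  fi
}

for h in monday.demos.example.com demos.example.com a.monday.demos.example.com; do
  if host_matches '*.demos.example.com' "$h"; then
    echo "$h -> nginx-b"
  else
    echo "$h -> no match"
  fi
done
```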

And this is how you can use the same Ingress controller to serve multiple sites or services, which is its main purpose. There's more you can configure in an Ingress definition, so go ahead and explore the Kubernetes documentation. And remember that we've created some Custom Resource Definitions for Kong, which let us go beyond the standard ingress definition. I hope you've found this post useful and that you are now on your way to becoming a K8s ninja.